Trending

Over the past few weeks, public figures have questioned whether the online debate around the Voice to Parliament referendum was being manipulated. The Sydney Morning Herald’s Anthony Galloway reported on fascinating data showing that the anti-Voice side of the debate was being led by anonymous accounts (often using hate speech), but that there was no evidence of bots. This spawned even more accusations that these accounts were being “bankrolled”, so I spoke to the source of the research, Queensland University of Technology senior lecturer Dr Timothy Graham, about what he took away from the data.

WebCam: You found that there were a lot of accounts that have been created recently and ones that weren’t using their real name. How do you interpret those results? Is this proof that there’s some kind of manipulation going on?

Tim Graham: It could be, it could not be. This is not the first time that we see these exact patterns. We saw the same things happening during the lockdowns in Victoria, for example. We saw it during the big season of bushfires.

The recency of the accounts could mean evidence of inauthentic activity; where you have freshly created [accounts] in the last couple of months, a much higher proportion of them weighing in on one issue, when you look at what those accounts are doing, they’re really only sticking to one or two things of interest.

On the other hand, you also see that, like, there’s this kind of merry-go-round that happens for right-wing Twitter where its accounts get suspended and then they have to create a new one. You see that they have to recreate accounts all the time, or spin up new accounts, so that they can express themselves in different ways. And so that’s the other side of the coin, you know, it may not be suspicious.

If you look at internet research, you see that [online] anonymity — the ability to be pseudonymous, to create personas that allow you to express yourself in ways you can’t otherwise express yourself — is good, healthy.

So this is my long-winded way of saying that it could be the case that these new accounts that are all weighing in on one issue all of a sudden and reflecting the talking points of politicians and the overarching narratives of those parties could be suspicious. Or it could also be the case that that is just attracting people who want to express their opinions but don’t want to identify themselves.

WC: That’s one of the things that makes this kind of thing hard to study, right? There’s no slam dunk, no smoking gun showing whether this is an astroturfing campaign. You use tools that can give us some hints about things that might be consistent with inauthentic behaviour, but inauthentic behaviour is hard to distinguish from people acting in an authentic way, like reacting to a big news event with strong feelings.

TG: Exactly. The fact that [the Voice to Parliament referendum] is so high profile means that it’s this messy mix of genuine political deliberation and interest but also trolling on both sides.

People who just want to weigh in because they know they’re gonna get an immediate response. Look at the reply threads to some of Sally McManus and Virginia Trioli’s [tweets]: it’s like nuclear warfare in those threads.

WC: Can I zoom out a little bit? I see accusations of bots and inauthentic behaviour happening online a lot. I think it’s people searching for ways to explain people whose politics might seem completely different to their own. But also, part of it is people’s fear that bad actors are swaying elections, drastically changing public opinion. But as someone who’s done a lot of research in this area, what does the evidence say about how persuasive or how impactful these campaigns are?

TG: There’s not very much evidence that voting behaviour is influenced directly by social media. There is some mixed research, but it’s kind of contextual: for a particular country, in a particular context, at a particular time, it might persuade people’s voting behaviours or their politics. But we can’t overstate its effect.

The problem is that we have this “hypodermic needle” perspective of political communication where it’s like: you go online, you’re exposed to some tweet, you get “injected” with some idea and suddenly it all changes. You’re like “Holy shit, I was wrong the whole time” and you somehow change your perspective — that doesn’t happen.

But, to me, all roads in the land of social media and even in the whole online ecosystem lead to and from Twitter. And here’s where the rubber hits the road for influence. Journalists and editors listen to the way things are framed [on Twitter]. News media listens to the way things are framed by politicians, marketers, they all do this. So what ends up happening is that what happens on Twitter matters because it matters to those who actually matter. If a journalist or politician sees something trending, it can inform the talking points or the editorial guidelines for the way that news is framed the next day.

I think there’s a higher order dynamic. With big events like this, everyone is looking at them, they’re trying to figure out how to frame it, they’re trying to figure out how to use it, how to exploit it. It’s kind of a barometer for public opinion. And then more established actors in the public sphere, mainstream media, politicians, etc, start to talk about it. And that’s when those really big seismic sort of effects can be seen.

WC: I mean if you come across a bot in the wild, they’re not very compelling. You don’t just see a tweet from a bot and, presto, your mind changes. Neither can someone buy a bot army and automatically swing an election. Maybe used in the right circumstances, by the right person with the right message or whatever, it could have an influence — so we need to keep this in perspective, don’t we?

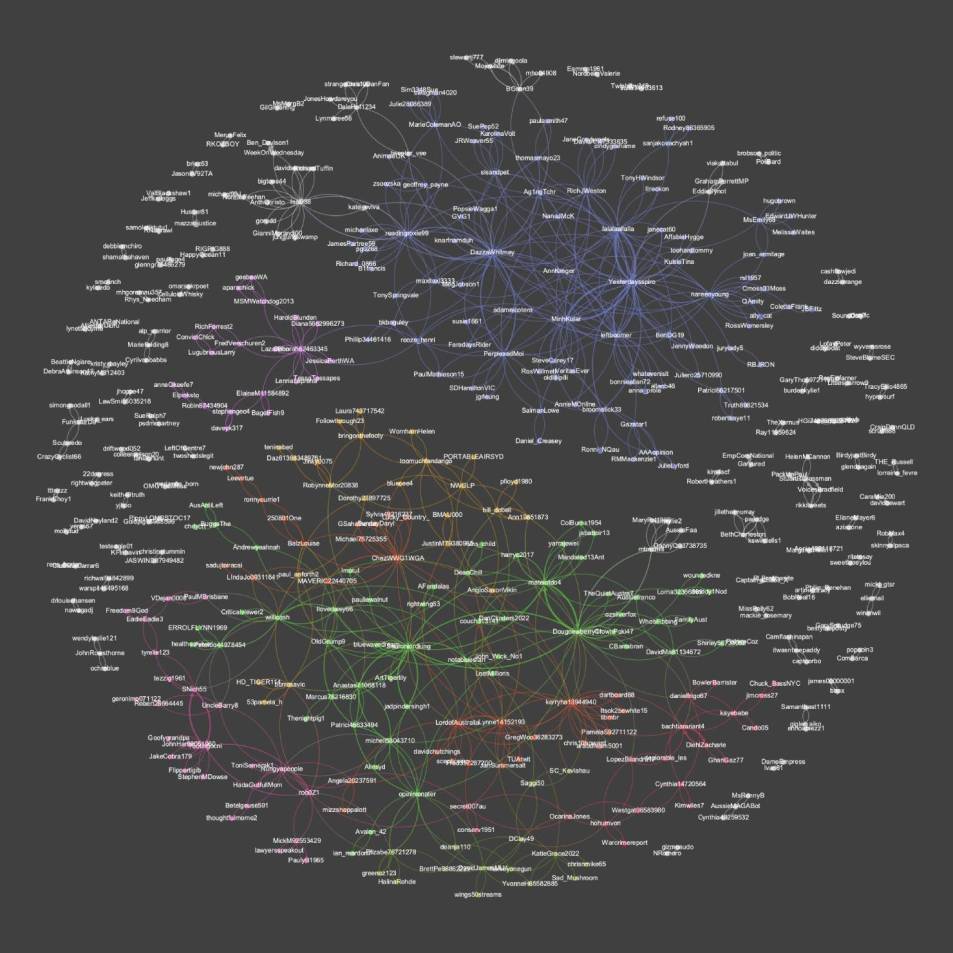

TG: We really do. It’s a pretty powerful idea to think that there is this big bot army that’s funded by some hugely resourced, shadowy actor overseas — I just think that is a really problematic myth. And so the work I’ve done is just to try and provide some evidence. And the evidence that I’ve put out there is boring, it’s like, you know, collect some data, run some bot analysis, do some statistics. And here’s these two graphs that you see in there and they’re basically identical.

It’s an image of accounts that have seemingly coordinated retweeting content around the vote. It basically shows there are two big clusters of accounts that are all doing similar things at a similar time. One cluster is the Yes, and the other is the No. Both sides are strategically using social media to try and push their point of view. So even if you go down to the level of really complicated analysis of digital astroturfing, you get kind of the same result.

WC: Maybe a good way to finish this is to ask what’s the impact of suggesting that there are bots or inauthentic behaviour? Is it important to keep a level head and a healthy amount of scepticism about influence operations to maintain faith in our electoral process and institutions?

TG: It’s a very good thing. In liberal democracies, we do need people to be able to trust what they’re seeing to have some way to inform their perspectives. If you see these accusations flying around all the time, it probably just disengages people, or they think, “Oh, well, you know, but it’s too complex” or “I can’t be going on social media”. That’s a really bad outcome.

It’s basically a conspiracy theory if you’re saying that there’s this shadowy cabal of elite stakeholders who are pulling the strings on this major issue. It’s not that much different to saying the Democrats are run by an elite cabal of blood-drinking paedophiles. It’s pretty dangerous territory.

Hyperlinks

Anti-Voice ‘news’ Facebook page is run by the No camp, but you wouldn’t know it

Speaking of internet skullduggery and the Voice to Parliament referendum… (Crikey)

Voice debate spurs rise in cyber abuse, threats and harassment

… and the tenor of the online debate (The Sydney Morning Herald)

Australian Medical Association calls for national regulations around AI in healthcare

I think there’s going to be a trend to try to introduce AI into every part of our life. I think there also needs to be opposition to accepting substandard services that are “good enough” because they’re powered by AI (ABC)

Twitter accused of responding ‘to tyrants quickly’ but ignoring Australian government

A cynical man would point out that Twitter is purely responding to its business interests. An even more cynical man would point out that these interests are aligned with autocracy (Guardian Australia)

‘Share far and wide’: how the far right seeds and spreads drag storytime protests

Don’t miss the kicker on this piece (Crikey)

Content Corner

I was thinking about the Intelligencer essay “How much of the internet is fake?” when I stumbled across a con over the weekend.

Temu is an insurgent Chinese e-commerce app that’s quickly become one of the most popular apps in the world. It combines the features of familiar shopping apps (like Australia’s The Iconic or, more closely, AliExpress) with a heavier focus on algorithmic choice, giving users customised product selections from its enormous catalogue of generally quite cheap, mass-produced items.

Beyond the company’s ravenous appetite for online advertising, Temu’s sudden popularity is also due in part to its referral affiliate marketing program that incentivises users to induce others to join up. And apparently the company doesn’t try too hard to figure out how real these people are.

People, it seems, are coordinating to click on each other’s Temu invites, creating fake accounts, in order to get cheap or free products in return. It’s so popular that r/TemuAustralia, an online community dedicated to the ruse, was the fastest-growing subreddit on the website in the past week. TikTok users are ingeniously posting videos of blank screens just to open up a comment section where people can trade codes to sign up.

What makes this all even more ridiculous is that Temu’s referral program is, by some accounts, also a bit dodgy. One OzBargain user wrote that the company plays tricks to make it seem like a user is close to getting a discounted or free product, but it’s not as easy as it seems.

In summary: people are using digital platforms to work together to sign up fake accounts to get misleading discounts on low-quality products being offered by an e-commerce company that may or may not care whether those accounts are real or not. Sometimes, the internet economy just feels like turtles all the way down.

That’s it for WebCam this week! I’ll be back soon. In the meantime, you can find more of my writing here. And if you have any tips or story ideas, here are a few ways you can get in touch.

So this week I read this. I can’t vouch for Haaretz, but they do mention it has been worked on by other orgs that do have strong reputations. Avatars are the point of interest. If you go to any public-facing Twitter account that is in any way controversial (try, I don’t know, Shoebridge or Bandt for starters), you’ll find negative responses from a zillion blue-check accounts; go to right-wing conspiracy accounts and they have floods of blue-check supporters too. When you find someone without a tick, it’s worth mentioning it to them. “Who would pay for that!” they snort. Radio silence when you tell them to go have a look at how many of the replies are from blue ticks, all paid for. They’re basically alone, with a small handful of like-minded citizens spread around the globe. The rest is hype.

https://www.haaretz.com/israel-news/security-aviation/2022-11-16/ty-article-static-ext/the-israelis-destabilizing-democracy-and-disrupting-elections-worldwide/00000186-461e-d80f-abff-6e9e08b10000